We'll look at a couple examples of solving the diffusion equation for different geometries and boundary conditions. We'll start off with the common Python libraries numpy and scipy and solve these problems in an somewhat "hacky" sort of way. This will enable us to solve Dirichlet boundary value problems. You'll see why I say this is a bit of a hack as we go set up the problem. Then we'll look at solving the same types of problems using the Assimulo package which a Python interface built around the Sundials differential algebraic equation solves put out by Lawrence Livermore National Laboratory. This will enable us to solve problems with Neumann boundary conditions as well.

The reason for not just using Assimulo from the start is scipy is a pretty standard library while Assimulo is more specialized and needs a C and FORTRAN compiler to install on Linux. I haven't yet tried installing it on Windows. I wanted this to be useful for those who don't have the tools available, or the permission to buld the package from scratch such as might be the case in a company's work environment.

In the interest of brevity, I'll only do 1-dimensional problems here and in a short follow-up, expand the ideas here to more complicated 2-dimensional problems.

As a last bit of administrivia, I will build the time derivative vectors using a for-loop for the main part of this code. I think this a bit clearer for the most part, but in general is not as computationally efficient. The building of the derivatives and residuals should ideally be done in a vectorized manner. I'll present code that does this in the appendix with a little description, but will stick to the for-loop for the most part.

So, with all that said, let's begin.

We'll start off with a 1-dimensional diffusion equation and look to solve for the temperature distribution in a rod whose end points are clamped at different fixed temperatures. For the sake of simplicity, we'll assume the diffusion coefficient is constant.

Our strategy will be to approximate the PDE by a system of ordinary differential equations which can be solved by existing ODE solvers. This is done by discretizing the spatial derivatives leading to an ordinary differential equation that describes the time evolution of the temperature at each grid point. Boundary and initial conditions gives us some algebraic equations that provide constraints on this system.

The temperature as a function of position and time is given by the 1-d diffusion equation,

\begin{equation}

\frac{\partial T}{\partial t} = D\frac{\partial^2 T}{\partial x^2},

\label{eq:1d_diffusion}

\end{equation}

where $t$ is time, $x$ is the position along the rod, $D$ is the diffusion coefficient, and $T$ is the temperature at a given position and time.

We will impose the boundary conditions $T(t, x = 0) = T_L$ and $T(t, x = L) = T_R$ where $L$ is the length of the rod. These are Dirichlet boundary conditions where the value of the function is specified at the boundaries. Later on, we'll touch briefly on Neumann conditions, where the derivative at the boundaries are known.

We will use a finite difference method discretizing the spatial component of our equation. We will use a central difference formula and approximate the second derivative at the $i$th point as,

\begin{equation}

\frac{\partial^2 T}{\partial x^2_i} \approx \frac{ T_{i+1} - 2T_i + T_{i-1}}{\Delta^2}.

\label{eq:central_difference}

\end{equation}

The subscript $i$ in Eq. \ref{eq:central_difference} again reffers to the $i$th grid point on our rod, and $\Delta$ is the distance between grid points. Here, we've assumed a uniform spacing for simplicity.

If there are a total of $N$ points, we can rewrite Eq. \ref{eq:1d_diffusion} as

a system of $N-2$ ODEs and two algebraic constrain equations for our boundary conditions.

\begin{align}

T_1 & = T_l \nonumber \\

\frac{dT_2}{dt} & = & D\left(\frac{ T_3 - 2T_2 + T_1}{\Delta^2}\right)\nonumber \\

\frac{dT_3}{dt} & = & D\left(\frac{ T_4 - 2T_3 + T_2}{\Delta^2}\right)\nonumber \\

&\vdots& \\

\frac{dT_{N-2}}{dt} & = & D\left(\frac{ T_{N-1} - 2T_{N-2} + T_{N-3}}{\Delta^2}\right)\nonumber \\

\frac{dT_{N-1}}{dt} & = & D\left(\frac{ T_{N} - 2T_{N-1} + T_{N-2}}{\Delta^2}\right) \nonumber \\

T_N & = T_r\nonumber.

\label{eq:difference_loop}

\end{align}

Now to code this. Scipy has a built-in differential equation solver solve_ivp included in the scipy.integrate package. It solves equations of the form $dy/dt = f(t, y)$, and uses a Runge-Kutta type algorithm by default. The function passed to the solver does not take addition arguments. Therefore, to pass other parameters to the solver, we need to use either a nested function or create a class and use class variables to hold additional information. For the first examples, we will use the first method, and when we look at Assimulo later, we will use the second.

# Import numpy and the ODE solver from Scipy import numpy as np from scipy.integrate import solve_ivp # Import plotting functionality import matplotlib.pyplot as plt def diffusion(T, t, D, N, L, Tl, Tr): # Calculate spacing between points delta = float(L) / float(N) def equations(t, T): # Boundary conditions set explicitly. This is probably redundant # as these numbers are set before the function is called and the # code takes the time derivative at these points to be zero, but # we'll ensure the proper boundary conditions anyway. T[0] = Tl T[-1] = Tr # Initialize time derivative vector Tprime = np.zeros( (N) ) # We are being lazy and forcing the temperature of the boundaries # not to change by setting the derivative to zero at those points Tprime[0] = 0.0 Tprime[-1] = 0.0 # Implement the diffusion equation in the interior points using the # central difference formula. This can be vectorized for better # performance, but I am just using a loop here. for i in range(1, N - 1): Tprime[i] = D * (T[i+1] - 2 * T[i] + T[i-1]) / delta**2 return Tprime # Solve the equation by calling solve_ivp and return the solution sol = solve_ivp(equations, [tspan[0], tspan[-1]], T, t_eval = tspan) return sol # Divide the rod into N points, 100 in this case N = 100 L = 1 # Rod length D = 0.01 # Diffusion coefficient Tl = 0. # Left-hand boundary condition Tr = 10.0 # Right-hand boundary condition # We are interested in t = 2 to 2 = 2 seconds tspan = np.linspace(0, 2) # Initial conditions T = 5.0 * np.ones( (N) ) # These are the boundary conditions. T[0] = Tl T[-1] = Tr # Solve the problem by calling our function which invokes the solver sol = diffusion(T, tspan, D, N, L, Tl, Tr) # Plot the solution plt.plot(np.linspace(0, 1, N), sol.y[:, -1], 'k') plt.xlabel('Position (m)') plt.ylabel('Temperature ($^\circ$C)') plt.grid(True) plt.show()

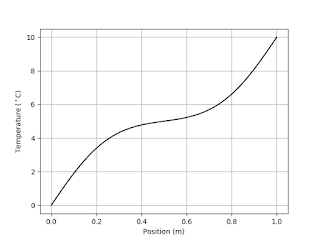

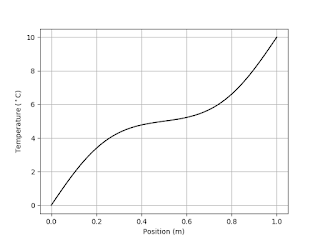

The is rather simple, so I've just presented the entire thing here in one block. We took the diffusion constant $D$ to be 0.01, the length of the rod $L$ to be 1m, and the initial temperature to be 5 degrees. The important part of this is the function equations which does the discretization and gives an ODE for each point of our grid. I've set the boundary conditions explicitly and set the time derivative at the two end point to zero to ensure they do not change.

Running this gives the following result for the temperature profile after two seconds.

|

| Figure 1: Temperature profile in the rod after two seconds |

The proper way to approach this is to use a differential algebraic equation (DAE) solver. DAEs, as the name implies, are systems of both ODEs and algebraic equations. They are generally written in the form of,

\begin{align}

\frac{dx}{dt} & = f(x(t), y(y), t) \\

\label{eq:DAE}

0 & = g(x(t), y(t), t). \nonumber

\end{align}

Assimulo comes with a DAE solver as part of the package and we can make use of that directly. This will enable us to handle more involved boundary conditions.

The code is more or less the same as above with a couple important exceptions. The first is the form of the function that defines Eq. 4. The solver expects an equation in the form,

\begin{equation}

0 = F\left( t, y, \frac{dy}{dt} \right).

\label{eq:DAE_res}

\end{equation}

So we write our function to have the form of res = f(t, y, yprime). where the variable res is the residual. As I noted earlier, I wrapped the solver function in a class this time rather than using nested functions.

# Import numpy import numpy as np # Import needed Assimulo libraries from assimulo.solvers import IDA from assimulo.problem import Implicit_Problem # Import plotting libraries import matplotlib.pyplot as plt class diffusion: def __init__(self, t, T, D, N, L, Tl, Tr): # Calculate spacing between points self.delta = float(L) / float(N) # Assign the variables passed into the constructor to class # variables. self.N = N self.D = D self.L = L self. Tl = Tl self. Tr = Tr # Initial guesses for T and Tprime (T should be OK as that is just # our initial temperature distribution T0 = T Tprime0 = np.zeros( T.shape ) t0 = 0.0 # Set which variables are to be treated as differential or # algebraic. algvar = [True] * T.shape[0] algvar[0] = False algvar[-1] = False # Create the model object and pass initial guesses at T and # Tprime. The code will then solve for consistent initial # conditions. model = Implicit_Problem(self.equations, T0, Tprime0, t0) # Create a simulation object from the IDA DAE solve and pass in # our model information. sim = IDA(model) # Bind our algvar variable to the sim object sim.algvar = algvar # Calculate consistent initial conditions. See IDA and Sundials # documentation for more information. sim.make_consistent('IDA_YA_YDP_INIT') # Integrate the system and bind the results to class variables. self.t, self.T, self.Tprime = sim.simulate(t[-1], 100) # Define the system of equations def equations(self, t, T, Tprime): # Allocate the residual vector res = np.zeros( T.shape ) # Set up boundary conditions res[0] = T[0] - Tl res[-1] = T[-1] - Tr # Calculate second derivative terms for i in range(1, N - 1): res[i] = Tprime[i] - D * (T[i+1] - 2 * T[i] + T[i-1]) \ / self.delta**2 # Return the residual vector return res # Divide the rod into N points, 100 in this case N = 100 L = 1 # Rod length D = 0.01 # Diffusion coefficient Tl = 0. # Left-hand boundary condition Tr = 10.0 # Right-hand boundary condition # Define a time vector and set the initial temperature t = np.linspace(0, 2) T = 5.0 * np.ones( (N) ) # Run the code to solve the problem problem = diffusion(t, T, D, N, L, Tl, Tr) # plot the results x = np.linspace(0, L, N) plt.plot(x, problem.T[-1, :], 'k') plt.xlabel('Position (m)') plt.ylabel('Temperature ($^\circ$C)') plt.grid(True) plt.show()

The other issue is that unlike ODEs, where for a first order equation we need only provide the initial values, in the case of DAEs, we need to provide both the initial values as well as the initial values of the first derivatives. We don't know the value of these derivatives which presents a problem We could write our own code to calculate these, but fortunately, the IDA solver can work this out for us without us having to much other than tell the solver which variables are to be treated as algebraic and which are differential.

The line,

sim.make_consistent('IDA_YA_YDP_INIT')

# Set which variables are to be treated as differential or # algebraic. algvar = [True] * T.shape[0] algvar[0] = False algvar[-1] = False

# Bind our algvar variable to the sim object sim.algvar = algvar

The rest of the code is essentially the same as the previous. Running this gives,

|

| Figure 2: Output of Assimul integration. |

You can see the utility of using this method as opposed to Scipy's internal solvers right away. Say we had the boundary constraint,

\begin{equation}

\frac{dT}{dx}_{x = L} = C.

\end{equation}

We can handle this by replacing the current boundary condition for the right-hand side with this line of code:

res[-1] = (x[-1] - x[-2]) / Delta - C

Appendix - Vectorization

I have said in other articles, one wants to avoid looping over arrays and matrices when using libraries like Numpy and Scipy. These packages are typically written in highly optimized C or FORTRAN code and compiled into libraries called by Python. As these binary libraries are much faster than code running through an interpreter, it is a good idea to try to write things to take advantage of this fact. When I learned numerical techniques, we were writing the code in C or C++, so this was less of an issue. I presented the code above using loops because I find that a little more intuitive, but I would be remiss if I didn't at least give a vectorized version.Consider Eqs. \ref{eq:central_difference} and 3. With the exception of the first and last row, these equations can be though of as the following matrix equation,

\begin{equation}

\vec{T^\prime} = \frac{D}{\Delta^2} \overline{\overline{\sigma}} \quad \vec{T},

\label{vecotr}

\end{equation}

where $\vec{T^\prime}$ and $\vec{T}$ are column vecrtors of the time derivative of the temperature and the temperature, respectively. The matrix $\overline{\overline{\sigma}}$ is a sparse, tridiaonal matrix whose elements are,

\begin{equation}

\left(\begin{matrix}

\cdots & \cdots & \cdots & \cdots & \cdots & \cdots & \cdots & \cdots\\

1 & -2 & 1 & 0 & 0 & 0 & \cdots& 0 \\

0 & 1 & -2 & 1 & 0 & 0 & \cdots & 0 \\

\vdots & \vdots & \vdots & \vdots & \vdots & \vdots & \ddots & \vdots \\

0 & 0 & 0 & 0 & 0 & 1 & -2 & 1 \\

\cdots & \cdots & \cdots & \cdots & \cdots & \cdots & \cdots & \cdots\\

\end{matrix}\right).

\label{eq:matrix}

\end{equation}

The first and last rows of the matrix are where we'd set the boundary conditions. When we build the matrix, we explicitly tell Scipy to use a sparse matrix representation. Most of the entries in Eq. \ref{eq:matrix} are zeros. Scipy is smart enough to not allocate memory for all the zero entries and to not multiply out all the zeros when evaluating Eq. 7. This is very useful, as these matrices can get large fast.

The code is essentially the same as before, just with the loop replaced by matrix multiplication.

# Import numpy import numpy as np import scipy.sparse # Import needed Assimulo libraries from assimulo.solvers import IDA from assimulo.problem import Implicit_Problem # Import plotting libraries import matplotlib.pyplot as plt # Import time module to compare speed differences import time class diffusion: def __init__(self, t, T, D, N, L, Tl, Tr): # Calculate spacing between points self.delta = float(L) / float(N) # Assign the variables passed into the constructor to class # variables. self.N = N self.D = D self.L = L self. Tl = Tl self. Tr = Tr # Initial guesses for T and Tprime (T should be OK as that is just # our initial temperature distribution T0 = T Tprime0 = np.zeros( T.shape ) t0 = 0.0 # Set which variables are to be treated as differential or # algebraic. algvar = [True] * T.shape[0] algvar[0] = False algvar[-1] = False # Create the model object and pass initial guesses at T and # Tprime. The code will then solve for consistent initial # conditions. model = Implicit_Problem(self.equations, T0, Tprime0, t0) # Create a simulation object from the IDA DAE solve and pass in # our model information. sim = IDA(model) # Bind our algvar variable to the sim object sim.algvar = algvar # Calculate consistent initial conditions. See IDA and Sundials # documentation for more information. sim.make_consistent('IDA_YA_YDP_INIT') # Integrate the system and bind the results to class variables. self.t, self.T, self.Tprime = sim.simulate(t[-1], 100) # Define the system of equations def equations(self, t, T, Tprime): # Allocate the residual vector res = np.zeros( T.shape ) # Set up boundar conditions res[0] = T[0] - Tl res[-1] = T[-1] - Tr # Calculate second derivative terms for i in range(1, N - 1): res[i] = Tprime[i] - D * (T[i+1] - 2 * T[i] + T[i-1]) \ / self.delta**2 # Return the residual vector return res class vectorized_diffusion: def __init__(self, t, T, D, N, L, Tl, Tr): # Calculate spacing between points self.delta = float(L) / float(N) # Assign the variables passed into the constructor to class # variables. self.N = N self.D = D self.L = L self. Tl = Tl self. Tr = Tr diagonals = [ np.ones((self.N-1,)), -2. * np.ones((self.N,)), np.ones((self.N-1,)) ] self.M = scipy.sparse.diags(diagonals, [-1, 0, 1]) # Initial guesses for T and Tprime (T should be OK as that is just # our initial temperature distribution T0 = T Tprime0 = np.zeros( T.shape ) t0 = 0.0 # Set which variables are to be treated as differential or # algebraic. algvar = [True] * T.shape[0] algvar[0] = False algvar[-1] = False # Create the model object and pass initial guesses at T and # Tprime. The code will then solve for consistent initial # conditions. model = Implicit_Problem(self.equations, T0, Tprime0, t0) # Create a simulation object from the IDA DAE solve and pass in # our model information. sim = IDA(model) # Bind our algvar variable to the sim object sim.algvar = algvar # Calculate consistent initial conditions. See IDA and Sundials # documentation for more information. sim.make_consistent('IDA_YA_YDP_INIT') # Integrate the system and bind the results to class variables. self.t, self.T, self.Tprime = sim.simulate(t[-1], 100) # Define the system of equations def equations(self, t, T, Tprime): # Allocate the residual vector res = Tprime - self.D / self.delta**2 * self.M * T # Set up boundar conditions res[0] = T[0] - Tl res[-1] = T[-1] - Tr # Return the residual vector return res # Divide the rod into N points, 100 in this case N = 100 L = 1 # Rod length D = 0.01 # Diffusion coefficient Tl = 0. # Left-hand boundary condition Tr = 10.0 # Right-hand boundary condition # Define a time vector and set the initial temperature t = np.linspace(0, 2) T = 5.0 * np.ones( (N) ) # Run the code with the loop to solve the problem. Also calculate the # time taken to run the code. start_time = time.time() problem = diffusion(t, T, D, N, L, Tl, Tr) end_time = time.time() print('Run time with for-loop = ', end_time - start_time) # Run the vectorized version and calculate the time. start_time = time.time() problem_vectorized = vectorized_diffusion(t, T, D, N, L, Tl, Tr) end_time = time.time() print('Run time with vectorized = ', end_time - start_time) # plot the results x = np.linspace(0, L, N) plt.plot(x, problem_vectorized.T[-1, :], 'b.', label = 'Vectorized') plt.plot(x, problem.T[-1, :], 'k', label = 'For-loop') plt.xlabel('Position (m)') plt.ylabel('Temperature ($^\circ$C)') plt.grid(True) plt.legend() plt.show()

For small numbers of grid points, the vectorization speedup is between a factor of three and four. With $N = 1000$, the vectorized code takes 72 seconds to run vs. 6000 seconds for the nonvectorized code. So use vectorization if you can!

See Also

For more information on finite difference methods, check out the Wikipedia article on the subject.

The Wikipedia article on DAEs has some basic information.

The Assimulo project

The SUNDIALS page at Lawrence Livermore National Laboratory.